Event: Discussion of AI Ethics with AI / IT Professionals in Nepal

- Post by: Aatiz Ghimire, Shreyasha Paudel

- April 3, 2022

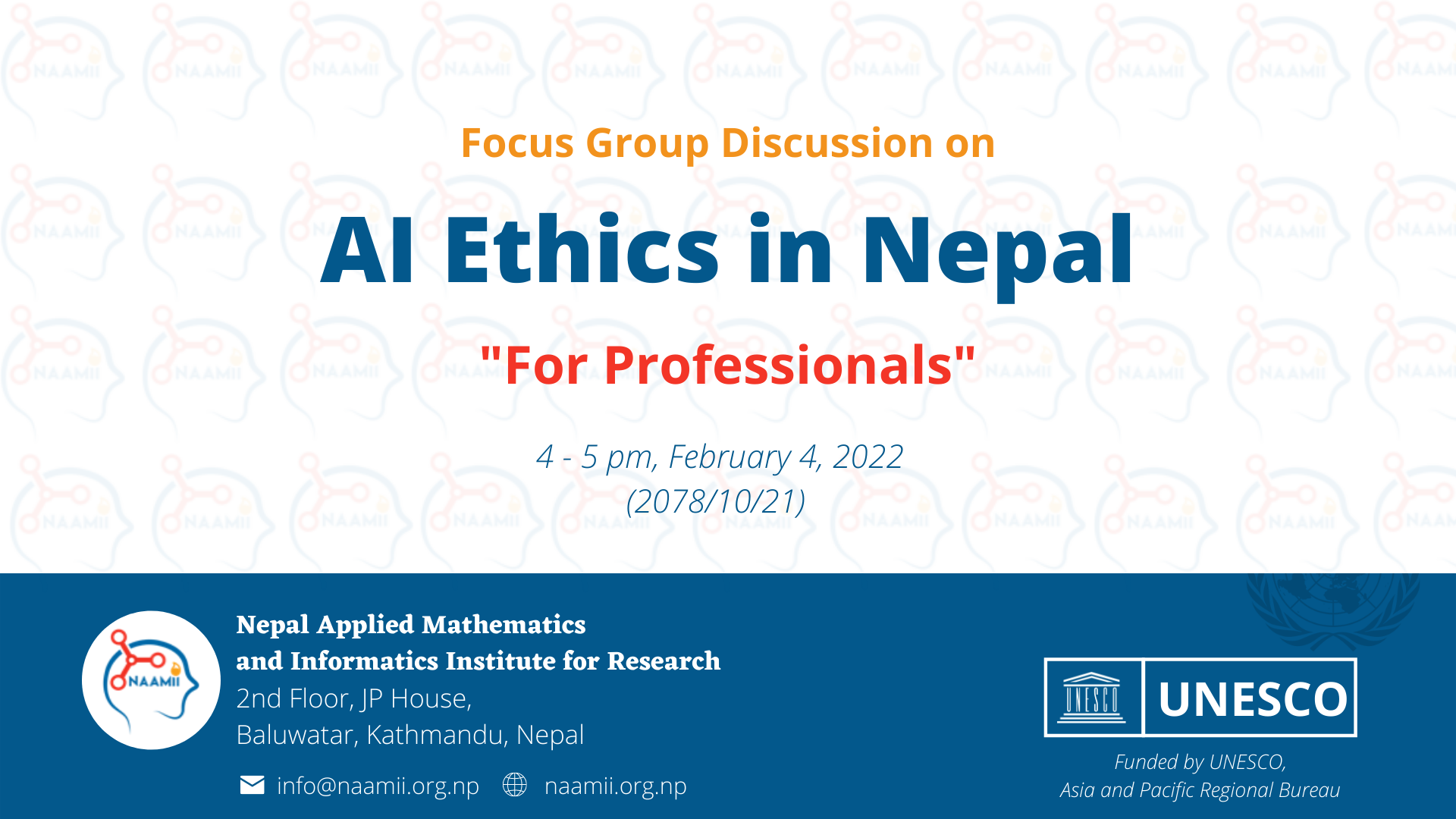

The Focus Group Discussion (FGD) with professionals was held on 4 February 2022. The target group was professionals working in the IT industry in Nepal, and the main goal was to gain a more in-depth understanding of their views and concerns about the AI industry and AI ethics in the context of Nepal. The discussion ran for one and a half hours, was attended by eight participants, and was moderated by NAAMII’s AI Ethics Team.

The attendees at the FGD were professionals from diverse work areas such as Natural Language Processing, AI Data Transcription, Computer Vision, Drone and Robotics, and Data Science. The group also had a mix of experience levels, including a company founder, senior-level manager, AI researcher, engineer, and AI curriculum developer. While diverse in work areas, the group was unfortunately homogeneous in terms of gender. Though we had invited an equal number of men and women, not all invited participants were able to attend because of scheduling conflicts, and all those who could attend were male. As a result, the perspectives presented might be limited.

The discussion topics covered the participants’ interest in AI ethics; the ethical challenges they have seen in their personal and professional lives; the individual, organizational, and policy interventions needed to build ethical AI; and lastly their ideas on how to build an AI Ethics community in Nepal.

The participants were very concerned about the concentration of power due to AI. They mentioned that building better AI products requires large amounts of data and resources, and that Nepal lacks local datasets and resources. They also talked about the risks associated with the way the majority of IT companies in Nepal sustain themselves by building outsourced products for international clients. AI can further exacerbate this risk by automating many such jobs out of existence. The participants felt that Nepali AI tech companies have mostly been building outsourced products for short-term gain while failing to develop AI that is contextualized to the Nepali community, which would improve capacity in the long run. They suggested that the government should develop better policies and guidelines for building (design, development, and deployment) and buying AI systems, products, and infrastructure, which are expensive for low-GDP countries. They felt that having products catered to the needs of Nepali people would help keep stakeholders accountable and also help with building ethical and responsible AI.

In terms of ethical problems that they have seen throughout their careers, the participants were mostly concerned about data use and privacy. They pointed out that, in their businesses, users have to share data in order to use a service. The participants were aware of incidents of AI bias in the global context but did not think such bias existed in their workplaces or was an immediate risk to Nepal. The participants also felt that their own ethics and moral values helped prevent biases from creeping into their work. This thread of discussion led to some contradictions and disagreement. Some participants suggested that human bias, arising from individual beliefs or choices, creates AI bias. Some participants felt strongly about the need for AI-specific guidelines and policies, while others thought that adhering to individual morality and democratic values would be sufficient and that building AI was no different from building any other product. The former group pointed out that building an AI or autonomous system around democratic values alone would be too complex, since individual choices differ from case to case. They also pointed out that companies are using AI as a branding tool for their services and products, which may give rise to ethical issues. The latter group agreed on the need for more awareness to increase AI literacy but felt that more policies would only lead to more bureaucracy.

Another area of disagreement was the tradeoff between improving AI models and the risk of job loss in a country like Nepal as AI models replace many outsourced jobs. This disagreement came directly from the experience of the participants. The participant concerned about potential job loss was a senior-level manager at a data annotation subsidiary of an international AI company, while the participants advocating for improved AI models owned a product company serving the Nepali market. The latter believed that it is normal for the nature of human jobs to evolve as technology develops and that this should not be an ethical concern for AI developers. Rather, it should be the government’s job to plan a smooth economic transition.

To conclude, the participants reiterated the complexity of ethics-related topics and the need for ongoing discussions. As a way forward, the participants discussed building an AI ethics community. They suggested that such a community should include stakeholders such as political leaders, social scientists, philosophers, and university professors who have earned integrity and respect from society. Some participants also brought up the ongoing trend of Nepali companies creating AI-related communities as a branding and hiring tool and abandoning them once the company’s need is met. The participants felt that, to be sustainable, an AI ethics community should be intentional in its goal and free of conflicts of interest. It should also hold recurring events, be free to join, and provide incentives for members to share their views freely.

Both professionals and policymakers are aware that the majority of Nepali AI companies and professionals do outsourced work, and both are concerned about the potential for power concentration and labor exploitation by multinational companies. Both groups agree on the need for ethical guidelines and policy for AI. The majority of professionals are aware of global issues but unaware of how those issues may manifest in their own jobs. They believe that it is necessary for diverse stakeholders to come together and take responsibility. Students and professionals have similar views about data, privacy, liability, fairness and bias, accountability, safety, and the limited AI resources in Nepal, but students are concerned about updating their outdated curriculum and adding ethical aspects of AI to it. Professionals felt that a curriculum can never be enough and that it is more important to learn on the job and to provide adequate opportunities for the younger generation to do so.

This project is generously funded by a grant from UNESCO Asia and Pacific Bureau.